Reportedly this stuff is basically useless, so I dropped it and there is no follow-up.

nostalgebraist. 2020. interpreting GPT: the logit lens.

https://www.alignmentforum.org/posts/AcKRB8wDpdaN6v6ru/interpreting-gpt-the-logit-lens

A study of how GPT predicts the next token.

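1. It uses a probing technique called the logit lens. GPT only produces logits at the final layer, so the idea is to attach the final logits layer after every intermediate layer and see what GPT "believes" at that depth.

2. The author argues that, as depth increases, GPT seems to form a fairly good guess about the token at some point in the middle, and the later layers refine that guess. (Although many of the intermediate guesses are pretty meaningless.)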

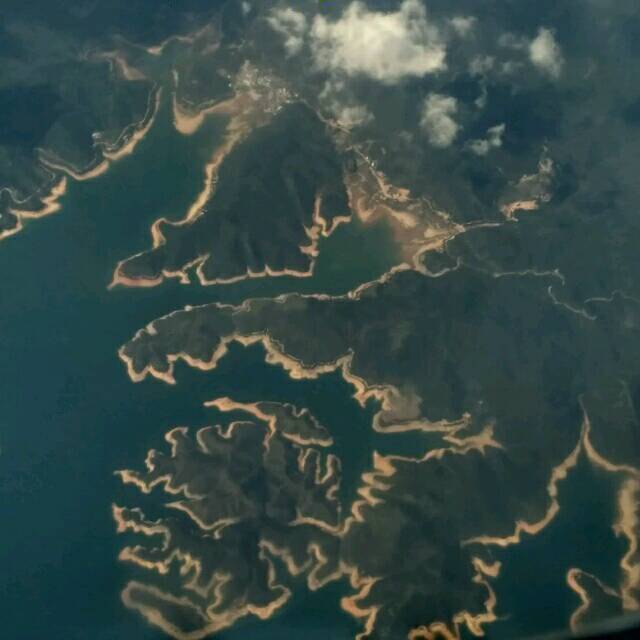

Rank, at each intermediate layer, of the token that ends up as the final top-1 output.
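A minimal sketch of the logit lens idea and of the rank plot above, on toy data. Everything here is an assumption for illustration: the hidden states and the unembedding matrix `W_U` are made up, `layer_norm` drops the learned gain and bias, and the helper names (`logit_lens`, `rank_of`) are hypothetical rather than taken from the post.

```python
import math

def layer_norm(h, eps=1e-5):
    """Normalize one hidden vector (no learned gain/bias: a simplification)."""
    mean = sum(h) / len(h)
    var = sum((x - mean) ** 2 for x in h) / len(h)
    return [(x - mean) / math.sqrt(var + eps) for x in h]

def unembed(h, W_U):
    """Project a hidden vector to vocabulary logits; W_U has one row per token."""
    return [sum(w * x for w, x in zip(row, h)) for row in W_U]

def logit_lens(hidden_states, W_U):
    """Decode every layer's hidden state with the final unembedding."""
    return [unembed(layer_norm(h), W_U) for h in hidden_states]

def rank_of(logits, token):
    """1-based rank of `token` among the logits (1 = top prediction)."""
    return 1 + sum(1 for x in logits if x > logits[token])

# Toy data (hypothetical): 3 layers, d_model = 3, vocabulary of 3 tokens.
W_U = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
hidden_states = [
    [1.0, 0.5, 0.0],  # after layer 0
    [0.2, 1.0, 0.9],  # after layer 1
    [0.0, 0.5, 2.0],  # after the last layer
]

per_layer_logits = logit_lens(hidden_states, W_U)
final_logits = per_layer_logits[-1]
final_top1 = final_logits.index(max(final_logits))

# Rank of the final top-1 token at every layer, as in the figure.
ranks = [rank_of(logits, final_top1) for logits in per_layer_logits]
print(ranks)
```

On this toy input the final top-1 token climbs from rank 3 to rank 1 across the layers, which is the "guess forms in the middle, then gets refined" pattern in miniature. With a real model, the hidden states would come from the residual stream after each block and `W_U` from the model's own output embedding.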

Nora Belrose, Zach Furman, Logan Smith, Danny Halawi, Igor Ostrovsky, Lev McKinney, Stella Biderman, and Jacob Steinhardt. 2023. Eliciting latent predictions from transformers with the tuned lens.

This paper uses a new probing technique called the tuned lens, which is essentially an improvement on the logit lens.

Tuned lens: train an affine probe for each block of a frozen pretrained model.

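The paper says that the logit lens, first, works to very different degrees on different models, and second, has quite a bit of bias(?). I didn't really follow this part. The paper seems to think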

That is, originally it was

and now it is

Hard to say; maybe it's somewhat useful?
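A rough sketch of how the two lenses differ, on toy data. As I understand the paper, the tuned lens applies a learned affine map `A h + b` to the hidden state before decoding it the logit-lens way, and the map is trained to match the final-layer distribution (KL divergence). The values below are assumptions for illustration: the translator `A_hand`, `b_zero` is hand-picked instead of trained, `layer_norm` has no learned parameters, and all names are hypothetical.

```python
import math

def layer_norm(h, eps=1e-5):
    """Normalize one hidden vector (no learned gain/bias: a simplification)."""
    mean = sum(h) / len(h)
    var = sum((x - mean) ** 2 for x in h) / len(h)
    return [(x - mean) / math.sqrt(var + eps) for x in h]

def matvec(M, v):
    """Matrix-vector product for a list-of-rows matrix."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def softmax(z):
    m = max(z)
    e = [math.exp(x - m) for x in z]
    s = sum(e)
    return [x / s for x in e]

def logit_lens(h, W_U):
    """Decode a hidden state directly with the final unembedding."""
    return matvec(W_U, layer_norm(h))

def tuned_lens(h, A, b, W_U):
    """Apply a per-layer affine translator, then decode as the logit lens does."""
    translated = [y + c for y, c in zip(matvec(A, h), b)]
    return logit_lens(translated, W_U)

def kl(p, q):
    """KL(p || q) for two discrete distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Toy data (hypothetical): d_model = 3, vocabulary of 3 tokens.
W_U = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
h_mid = [1.0, 0.5, 0.0]    # an intermediate hidden state
h_final = [0.0, 0.5, 2.0]  # the final hidden state
p_final = softmax(logit_lens(h_final, W_U))

# With an identity translator the tuned lens reduces to the logit lens.
A_id = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
b_zero = [0.0, 0.0, 0.0]
same = tuned_lens(h_mid, A_id, b_zero, W_U)

# Hand-picked stand-in for a trained translator (NOT learned here).
A_hand = [[0.0, 0.0, 1.0], [0.0, 1.0, 0.0], [1.0, 0.0, 0.0]]
kl_logit = kl(p_final, softmax(logit_lens(h_mid, W_U)))
kl_tuned = kl(p_final, softmax(tuned_lens(h_mid, A_hand, b_zero, W_U)))
print(kl_logit, kl_tuned)
```

In the real method the translator for each layer would be fit by gradient descent on this KL term over many tokens, with the base model frozen; the hand-picked matrix only shows that a suitable affine map can bring an intermediate state's decoded distribution much closer to the final one.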

Patchscopes: A unifying framework for inspecting hidden representations of language models

A framework that uses an LLM to explain the patterns in an LLM's internal neurons. Doesn't feel very useful to me.

https://arxiv.org/pdf/2401.01967

Weights, neurons, sub-networks, or representations. This classification organizes interpretability methods by which part of the computational graph each method helps to explain: weights, neurons, sub-networks, or latent representations.

(Räuker et al., 2023)

Edward J. Hu, Yelong Shen, Phillip Wallis, Zeyuan Allen-Zhu, Yuanzhi Li, Shean Wang, Lu Wang, and Weizhu Chen. 2021. LoRA: Low-rank adaptation of large language models.

Alexander Yom Din, Taelin Karidi, Leshem Choshen, and Mor Geva. 2023. Jump to conclusions: Short-cutting transformers with linear transformations.

Nora Belrose, Zach Furman, Logan Smith, Danny Halawi, Igor Ostrovsky, Lev McKinney, Stella Biderman, and Jacob Steinhardt. 2023. Eliciting latent predictions from transformers with the tuned lens.

Nelson Elhage, Neel Nanda, Catherine Olsson, Tom Henighan, Nicholas Joseph, Ben Mann, Amanda Askell, Yuntao Bai, Anna Chen, Tom Conerly, Nova DasSarma, Dawn Drain, Deep Ganguli, Zac Hatfield-Dodds, Danny Hernandez, Andy Jones, Jackson Kernion, Liane Lovitt, Kamal Ndousse, Dario Amodei, Tom Brown, Jack Clark, Jared Kaplan, Sam McCandlish, and Chris Olah. 2021. A mathematical framework for transformer circuits.